I recently had to dig quite deeply into how digital colors, and by extension colorspaces, work. I have learned so much about it, and I know I still have so much to learn, so I’ll start with a disclaimer: I am neither a developer nor a color scientist. I have notions in both domains, but I won’t claim to be a specialist in either one. What I write in this article is only my understanding of how these things work and may be erroneous (feel free to contact me if you see any mistakes).

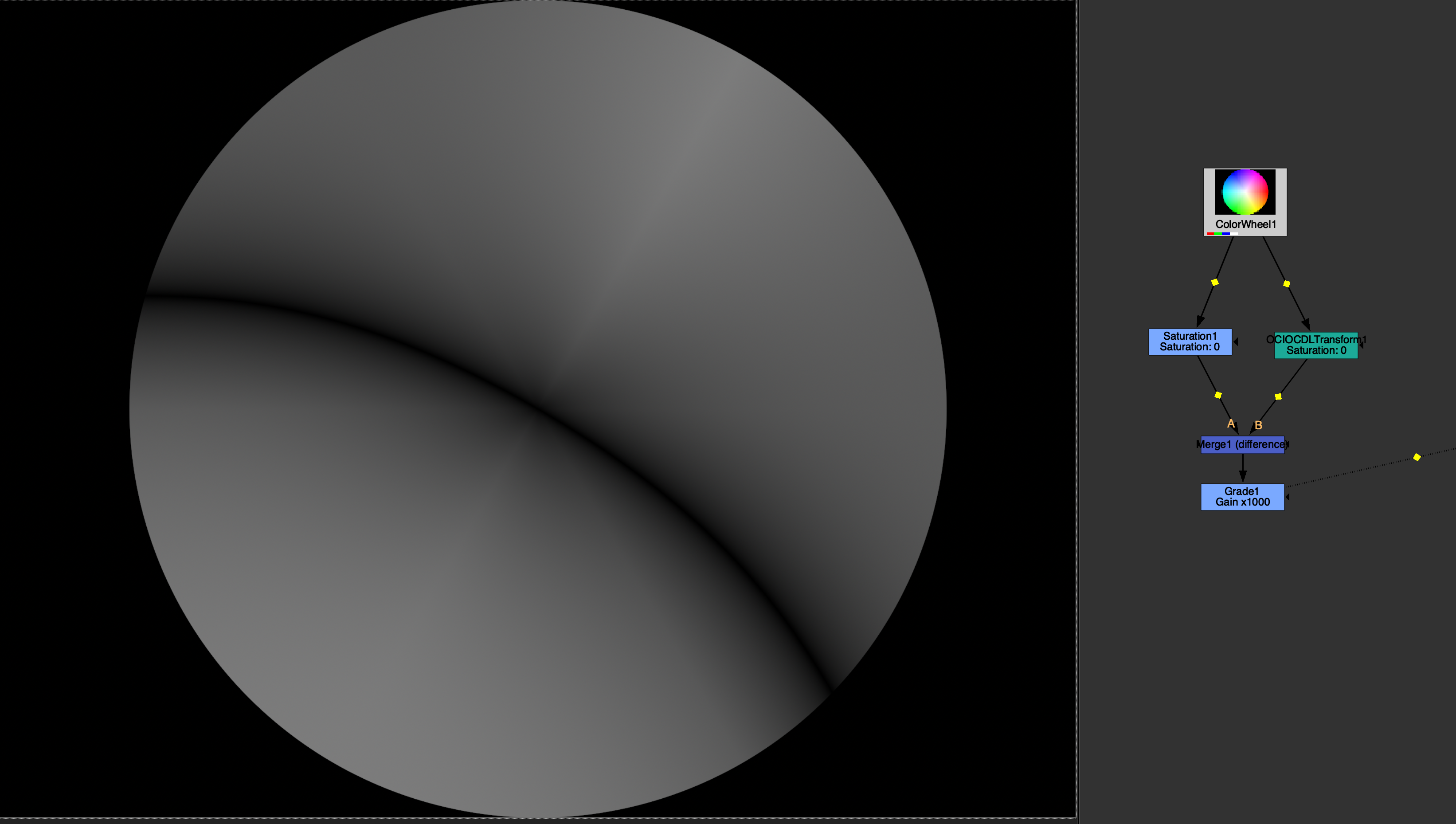

Let’s go straight to the subject that struck me when playing with Nuke’s OCIO toolset, here’s a screenshot of a test you can do in Nuke :

I won’t go into the debate of whether you should use a saturation knob in your comp at all. In my opinion, this kind of axiom is usually irrelevant, as it really depends on the context and on why you’d want to use it; in any case, it is out of the scope of this article. Actually, the first question to ask isn’t whether we should use it, but whether the one we’re using works properly. Again, a lot could be said about colors and the way they are handled in our software (mainly Nuke in my case), and I really recommend that you dig into the subject as deep as you can: it is a fascinating world, built by brilliant people.

Just a little recap

When talking about saturation, the easiest path is to start with black and white. It might seem counter-intuitive to talk about “no color” when the subject is specifically color, but as you already know, we’re actually talking about the same thing. Black and white is just all three channels, red, green and blue, having the same value, so that none seems more present than another, which gives the impression that no color is present at all. This is what happens when the saturation knob is at 0: all channels in a pixel are set to the same value. But where does this value come from, and what does it represent?

The first and most intuitive way to do this is to take the average of the three channels. This sounds good until you realize that our eyes (and actually most cameras) don’t see light this way. For reasons I won’t go into, as my biology level is… well… I won’t go into it, our eyes are much more sensitive to green light than to red light, and least sensitive to blue light. So the engineers who created the standards followed this path and gave more weight to the green channel than to the red, and more to the red than to the blue. But what standard are we talking about?
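To make the difference concrete, here is a minimal C++ sketch comparing the two approaches on a single pixel. The names (Pixel, grey_average, grey_weighted, to_grey) are hypothetical, and the weights are assumed to be the Rec 709 luma coefficients of the current revision, used here only as an example of a perceptual weighting.

```cpp
// Hypothetical helper type: one pixel with three float channels.
struct Pixel {
    float r, g, b;
};

// Naive approach: every channel counts the same.
inline float grey_average(const Pixel& p) {
    return (p.r + p.g + p.b) / 3.0f;
}

// Weighted approach: green counts most, then red, then blue.
// Weights assumed here: the Rec 709 luma coefficients (current revision).
inline float grey_weighted(const Pixel& p) {
    return 0.2126f * p.r + 0.7152f * p.g + 0.0722f * p.b;
}

// Full desaturation (saturation knob at 0): the same grey on all channels.
inline Pixel to_grey(const Pixel& p, float (*grey)(const Pixel&)) {
    const float y = grey(p);
    return Pixel{y, y, y};
}
```

With the plain average, pure green and pure blue both become the same grey (1/3), whereas the weighted version turns pure green into a much brighter grey (0.7152) than pure blue (0.0722), which is closer to how we perceive them.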

Rec, ITU-R, BT 709… Sorry, what’s your name again?

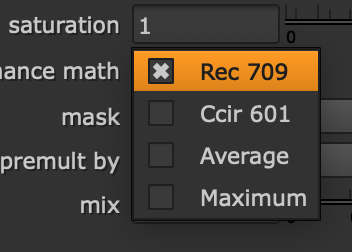

ITU-R BT.709, usually called “Rec 709” (“Rec” for Recommendation, it seems), is the standard for image encoding and signal characteristics of high-definition television (thanks, Wikipedia). Amongst a lot of parameters, it specifies the luma coefficient of each channel. These coefficients are still used today, by default in Nuke’s Saturation node, as well as in OpenColorIO’s processing when dealing with non-HDR data. So now we have some universal, standardized values for our saturation! What could go wrong?
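Written as equations, a luma-based saturation does the following, here with the coefficients of the current revision (BT.709-6): Y is the luma, C stands for each of the R, G and B channels, and s is the saturation knob value, so that s = 0 puts the grey value Y on all three channels and s = 1 leaves the pixel untouched.

$$
Y = 0.2126\,R + 0.7152\,G + 0.0722\,B
$$

$$
C_{\text{out}} = Y + s\,(C_{\text{in}} - Y), \qquad C \in \{R, G, B\}
$$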

To understand why we don’t get the same result from Nuke’s Saturation node and from the saturation of OpenColorIO’s CDLTransform, although both claim to use the same standard, we have to go beyond theory and see how this is implemented in the software. Don’t get me wrong, the difference is really not obvious: it is invisible to the naked eye, but still there when comparing both results with a Difference node.

Opening the source

Thankfully, the answer to my question is quite easy to find, as OpenColorIO is fully open source, and The Foundry ships the source code of Nuke’s Saturation node as an example in the NDK. Let’s start with Nuke’s source. The full code is quite long, so I isolated the part that interests us:

```cpp
switch (mode) {
  case REC709:
    while (rIn < END) {
      y = y_convert_rec709(*rIn, *gIn, *bIn);
      *rOut++ = lerp(y, *rIn++, fSaturation);
      *gOut++ = lerp(y, *gIn++, fSaturation);
      *bOut++ = lerp(y, *bIn++, fSaturation);
    }
    break;

  case CCIR601:
    while (rIn < END) {
      y = y_convert_ccir601(*rIn, *gIn, *bIn);
      *rOut++ = lerp(y, *rIn++, fSaturation);
      *gOut++ = lerp(y, *gIn++, fSaturation);
      *bOut++ = lerp(y, *bIn++, fSaturation);
    }
    break;

  case AVERAGE:
    while (rIn < END) {
      y = y_convert_avg(*rIn, *gIn, *bIn);
      *rOut++ = lerp(y, *rIn++, fSaturation);
      *gOut++ = lerp(y, *gIn++, fSaturation);
      *bOut++ = lerp(y, *bIn++, fSaturation);
    }
    break;

  case MAXIMUM:
    while (rIn < END) {
      y = y_convert_max(*rIn, *gIn, *bIn);
      *rOut++ = lerp(y, *rIn++, fSaturation);
      *gOut++ = lerp(y, *gIn++, fSaturation);
      *bOut++ = lerp(y, *bIn++, fSaturation);
    }
    break;
}
```

This part of the pixel_engine() method applies the math selected by the luminance math knob. We can see that when Rec 709 is selected, the function y_convert_rec709() is called. This function isn’t defined in this file; it comes from the header DDImage/RGB.h. This is the file that contains the function giving us the coefficients used by Nuke’s Saturation node.

```cpp
static inline float y_convert_rec709(float r, float g, float b)
{
  return r * 0.2125f + g * 0.7154f + b * 0.0721f;
}
```

These are the coefficients used by the Saturation node. Now let’s look at OpenColorIO’s. As said earlier, I was comparing the Saturation node with the OCIOCDLTransform node, so that’s where I started searching. There I found the getSatLumaCoefs() method:

```cpp
void CDLTransformImpl::getSatLumaCoefs(double * rgb) const
{
  if (!rgb)
  {
    throw Exception("CDLTransform: Invalid 'luma' pointer");
  }

  rgb[0] = 0.2126;
  rgb[1] = 0.7152;
  rgb[2] = 0.0722;
}
```

There I got my answer: the coefficients used by the two nodes aren’t the same! So who’s right? Well, this article’s title spoils it a bit, but in a way, Nuke isn’t totally off.
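To get an idea of the size of the gap, here is a small C++ sketch that runs the same lerp-based saturation with both coefficient sets and measures the difference, roughly what the Difference node shows. It is a hypothetical re-implementation for illustration (LumaCoefs, luma, saturate_channel and saturation_difference are made-up names), not Nuke’s or OpenColorIO’s actual code; only the coefficient values are taken from the excerpts above.

```cpp
#include <cmath>

// Luma coefficients as quoted in this article (hypothetical struct name).
struct LumaCoefs {
    float r, g, b;
};

// From Nuke's y_convert_rec709() in DDImage/RGB.h.
constexpr LumaCoefs kNukeRec709{0.2125f, 0.7154f, 0.0721f};
// From OpenColorIO's CDLTransformImpl::getSatLumaCoefs().
constexpr LumaCoefs kOcioRec709{0.2126f, 0.7152f, 0.0722f};

// Weighted sum of the three channels.
inline float luma(const LumaCoefs& k, float r, float g, float b) {
    return k.r * r + k.g * g + k.b * b;
}

// Same shape as lerp(y, c, s) in the Nuke excerpt: y + s * (c - y).
inline float saturate_channel(float y, float c, float s) {
    return y + s * (c - y);
}

// Absolute difference between the two results on the red channel.
// The lerp is identical for all channels, so the difference works out to
// (1 - s) * |yNuke - yOcio| on green and blue as well (up to float rounding).
inline float saturation_difference(float r, float g, float b, float s) {
    const float yNuke = luma(kNukeRec709, r, g, b);
    const float yOcio = luma(kOcioRec709, r, g, b);
    return std::fabs(saturate_channel(yNuke, r, s) - saturate_channel(yOcio, r, s));
}
```

Since both sets sum to 1, neutral greys come out identical. For values between 0 and 1, the two luma values differ by at most 0.0002 (for example on pure green, where 0.7154 - 0.7152 = 0.0002), and the lerp scales that gap by (1 - s), which is why it only shows up with a Difference node.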

The thing is, a standard isn’t set in stone; it evolves over time, as technology does. Rec 709’s full current name is ITU-R BT.709-6, and yes, the 6 is there because we’re at the sixth revision of the standard. The first version of Rec 709 was released in 1993 with the first coefficients, which are the ones Nuke is using today. The first revision, published in 1995, changed the luma coefficients to the ones we are using nowadays. End of story.

I opened a support ticket with The Foundry to let them know and to ask whether there was a reason. I didn’t receive any answer about the reason, but they assured me that they would update Nuke with the “new” coefficients. As I’m writing this article, Nuke’s current version is 16.0v2, and the coefficients haven’t been updated yet. To be fair, the difference is very small and won’t change much; I’m not even sure why they were changed in the first place. The strangest part is that, before Nuke 15, the default BlinkScript node in Nuke came with a Saturation kernel, basically a Saturation node written in BlinkScript, and the difference was… that its coefficients were the correct ones. Again, I’m not sure why The Foundry updated the coefficients in the BlinkScript node but not in the Saturation one. This might be for backward compatibility, but it’s been 30 years!

Anyway, if you do care about having the right coefficients in your saturation, you’d better use an OCIO node or a BlinkScript kernel. Is it worth it? It’s up to you. It won’t make a big difference at the scale of a single node, but it’s not about the size of one error, it’s about how many such errors pile up in your comp and compound each other. This leaves one question: should you use any saturation knob in your comp at all?