It is quite common in VFX production for a few shots coming from editorial to carry translate and scale transformations. Depending on how much of the frame is needed, it may be decided to give the artists the original plate so they have as much image information as possible. The conform is then applied in comp, either at the beginning or at the end of the tree, wherever it makes the most sense. When CG has to be integrated into those shots, the matchmove is also done on the original plate, and that’s where the problem arises.

To get the right placement and performance for CG characters and elements, CG artists may need to know how the final image will be framed, which matters even more when the 2D transformation is animated. A similar problem appears when the plate is retimed and the camera was tracked on the non-retimed plate, but plenty of articles already explain how to retime a camera in Nuke, so that case is covered. The framing problem came up on a few projects I worked on, so I started experimenting and came up with the following solution.

What do we want to achieve?

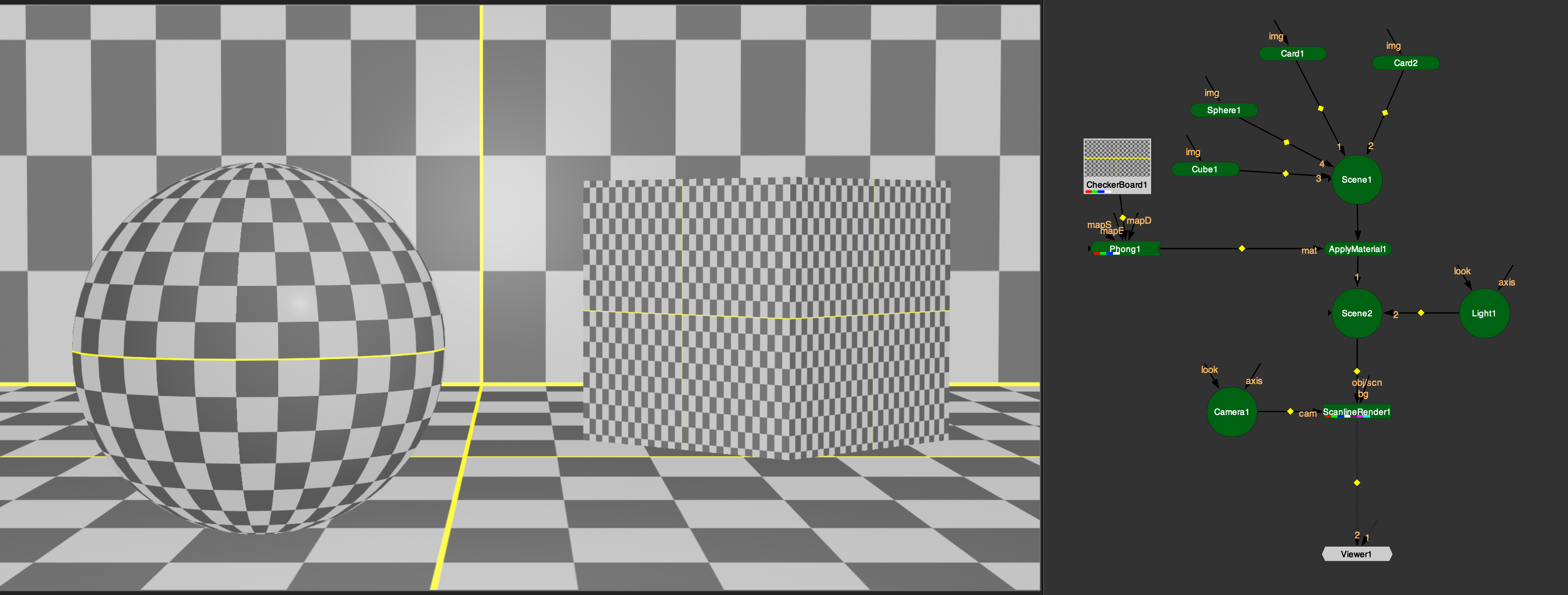

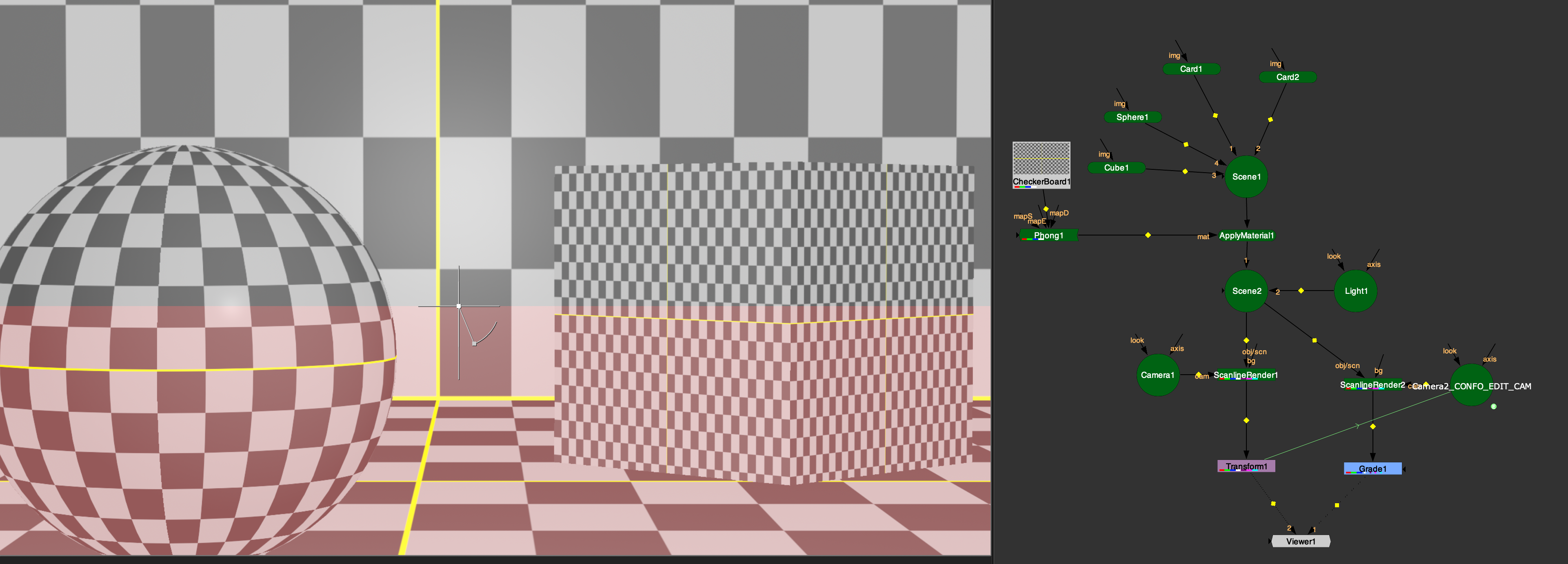

So what does it actually mean to reproduce a 2D conform on a 3D camera? I started from the basics, using some tracked plates we had at the time along with their 3D geometry. For the purpose of this article, I reproduced a simple 3D setup in Nuke.

I then applied a simple transform with a Transform node and tried to get the same result using only the 3D world.

The simple approach

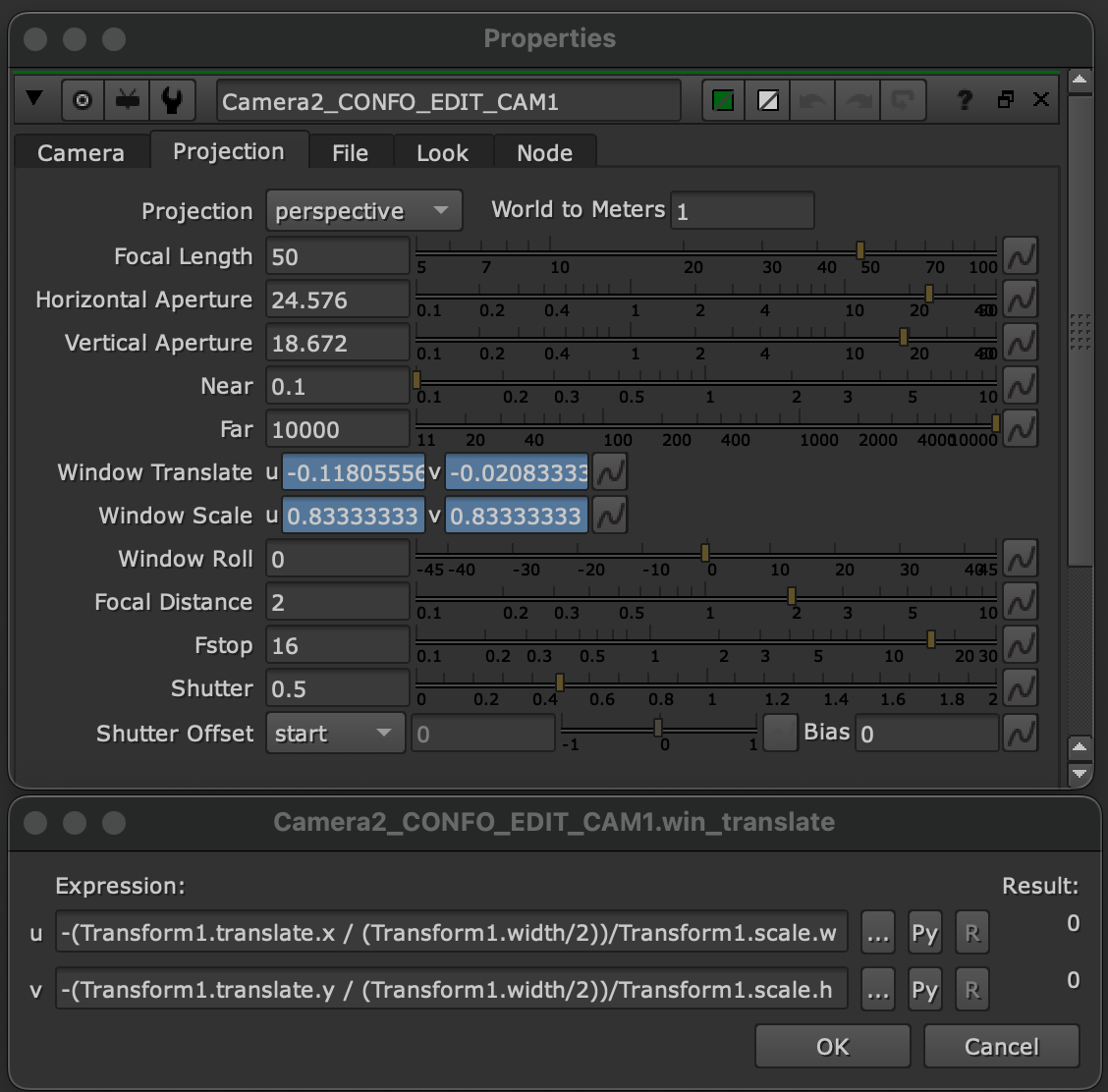

The trick is not to move the camera in space, because that would change the parallax of the 3D scene. Instead, I moved the sensor, which Nuke exposes on the camera as “Window Translate” and “Window Scale”. This would probably be a terrible idea with a real camera, but in a virtual world it works: translating and zooming the frame is as simple as offsetting and scaling the sensor. The only thing left is to find the equations that link the pixel-space transformation to the camera window, which is normalized, like the whole 3D space in Nuke. It turns out the solution is much simpler if the pivot point stays in the center of the frame, because the camera’s window scale has no pivot control of its own.
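To make “offsetting and scaling the sensor” concrete, here is a minimal model of the window knobs. It is an assumption, not taken from Nuke’s documentation, chosen because it reproduces the centered-pivot expressions below: measure a pixel $(x, y)$ in screen coordinates centered on a $w \times h$ frame and normalized over half the width, then let Window Translate $t_w$ shift and Window Scale $s_w$ scale those coordinates.

$$u = \frac{x - w/2}{w/2}, \qquad v = \frac{y - h/2}{w/2}, \qquad u' = \frac{u - t_{w,x}}{s_{w,x}}, \qquad v' = \frac{v - t_{w,y}}{s_{w,y}}$$

Under this model, a centered-pivot Transform $x' = w/2 + s(x - w/2) + t$ becomes $u' = s\,u + t/(w/2)$, which the window matches with $s_w = 1/s$ and $t_w = -\frac{t/(w/2)}{s}$.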

For a centered pivot, the translate expressions are:

win_translate.x = -(Transform.translate.x / (Transform.width/2))/Transform.scale.w

win_translate.y = -(Transform.translate.y / (Transform.width/2))/Transform.scale.h

In both cases we divide by half the width, because Nuke’s 3D space is normalized over the width. We need a minus sign at the beginning because the whole transformation is inverted: moving the window one way moves the image the other way. We then divide by the scale, as the scale also affects the translation.
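As a quick sanity check outside Nuke, the centered-pivot expressions can be written as a plain Python function. The function and parameter names are made up for this sketch; inside Nuke the values come from the Transform’s translate, scale and width.

```python
def centered_window_translate(tx, ty, sw, sh, width):
    """Window Translate for a Transform whose pivot is the frame center.

    Mirrors the two expressions above: both axes divide by width/2
    because Nuke's 3D space is normalized over the frame width, the
    sign is flipped because the window moves opposite to the image,
    and the result is divided by the scale.
    """
    half_w = width / 2.0
    win_x = -(tx / half_w) / sw
    win_y = -(ty / half_w) / sh
    return win_x, win_y


# A 1920-wide plate pushed 96 px right and 54 px up, with no scale.
print(centered_window_translate(96, 54, 1.0, 1.0, 1920))  # → (-0.1, -0.05625)
```

Note that the y translation is also divided by half the *width*, so 54 px of vertical move gives a smaller window value than 96 px of horizontal move would suggest from the 16:9 ratio alone.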

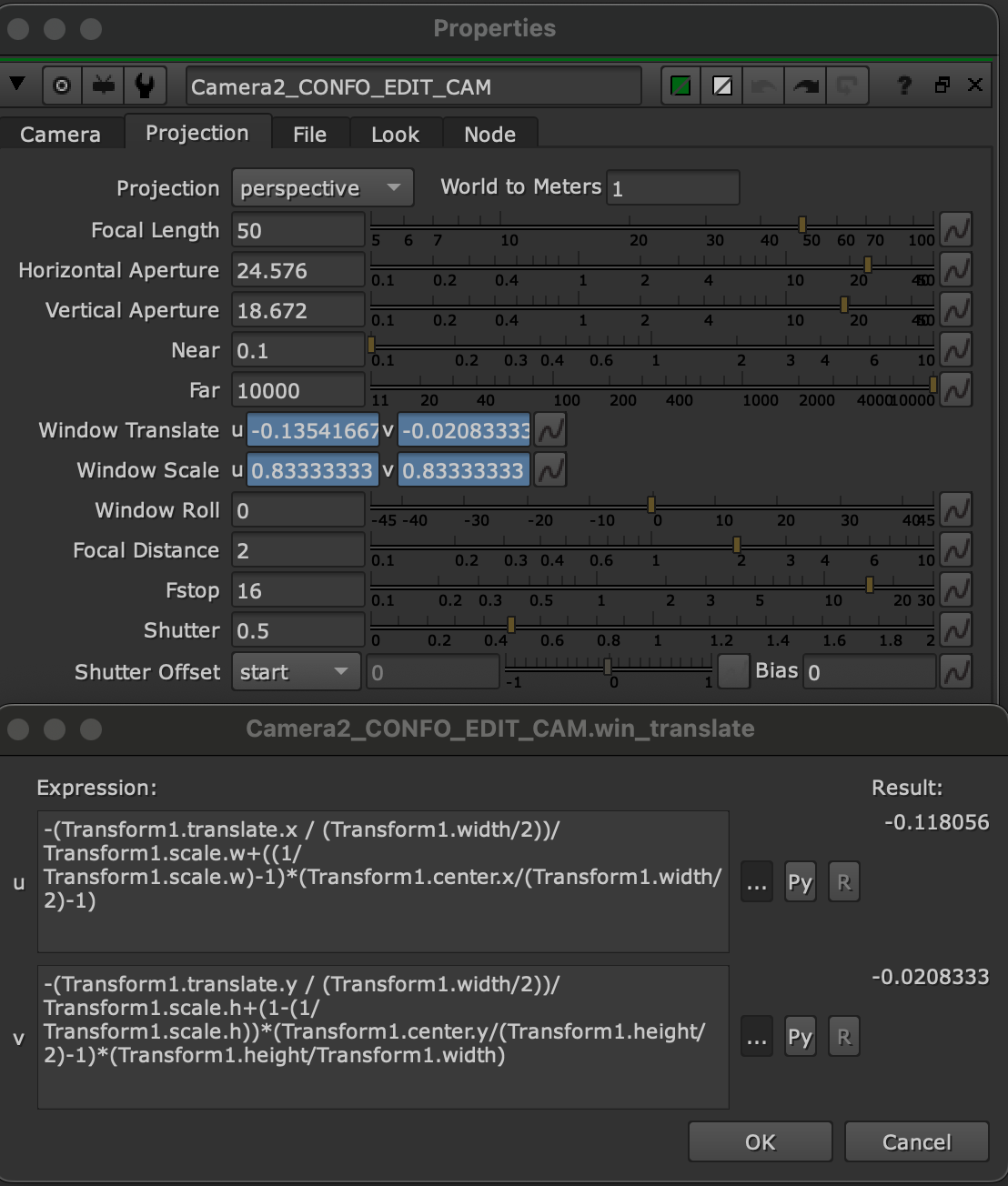

Playing with the pivot

If the pivot point is not centered, it’s another story. Scaling around an off-center pivot also shifts the frame compared to a centered-pivot scale, so we have to “reproduce” that shift in the Window Translate. Being as bad at maths as I am, the solution was not obvious to me, and it took a lot of trial and error: comparing results and combining the parameters in various ways until I got a match. It sounds terrible, I guess, but it works.

We first need to compare the position of the pivot with its natural centered position. We calculate the center of the frame (width/2, height/2) and divide the pivot position by this value: a result of 1 means the pivot is centered, in which case we don’t want any offset. Since “no offset” has to come out as 0, we subtract 1. For the x axis, this gives: (Transform.center.x/(Transform.width/2)-1).

This yields 1 when the pivot is on the right border of the frame and -1 when it is on the left border.
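The pivot term on its own, as a hypothetical plain-Python helper `pivot_offset` (not part of Nuke), shows the -1 / 0 / 1 behavior across a 1920-pixel-wide frame. On the y axis Nuke’s origin is at the bottom of the frame, so 1 means the top border there.

```python
def pivot_offset(center, size):
    """Normalized offset of the pivot from the frame center on one axis.

    Same arithmetic as (Transform.center.x/(Transform.width/2)-1):
    0 for a centered pivot, 1 on the far border, -1 on the near border.
    """
    return center / (size / 2.0) - 1.0


for cx in (0, 960, 1920):
    print(cx, pivot_offset(cx, 1920))
# 0 -1.0
# 960 0.0
# 1920 1.0
```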

Next, we want this offset to be driven by the actual scale value, since no offset should be added when no scale is applied (a scale of 1). So we invert the Transform’s scale, because scale is inverted in the camera (to put it simply, as in real life: with the same lens, the smaller the sensor, the bigger the object looks). Again, we want 0 when the scale is 1, but rather than subtracting 1 from the inverted scale, we subtract the inverted scale from 1, so that the offset goes in the right direction (try it the other way round to see how it reacts). For the x axis, this part gives: (1-(1/Transform.scale.w)). Finally, we multiply these two expressions together and add the result to the existing translation.
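The trial-and-error result can also be derived from the centered-pivot expression alone, assuming the Transform scales about its center knob and then translates. Scaling by $s$ about a pivot $c_x$ gives $x' = c_x + s(x - c_x) + t$, while scaling about the frame center gives $x' = w/2 + s(x - w/2) + t$. The difference, $(c_x - w/2)(1 - s)$ pixels, behaves like an extra translation, so feeding it through the centered-pivot formula $-\frac{t/(w/2)}{s}$ gives the extra Window Translate:

$$\Delta t_{w,x} = -\frac{(c_x - w/2)(1 - s)}{(w/2)\,s} = \left(1 - \frac{1}{s}\right)\left(\frac{c_x}{w/2} - 1\right)$$

This is exactly the product of the scale term and the pivot term described above.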

There is a small change for the y axis. As mentioned, Nuke’s 3D system is normalized over the width (the x axis), so we have to multiply the y-axis pivot offset by the inverse of the frame’s aspect ratio: (Transform.height/Transform.width). This gives the right amount of translation.

Here are the full expressions to put in your camera’s Window Translate:
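For the y axis the pixel offset is $(c_y - h/2)(1 - s_h)$, but it is normalized over half the width rather than half the height, which is where the extra ratio comes from:

$$\frac{c_y - h/2}{w/2} = \left(\frac{c_y}{h/2} - 1\right)\frac{h}{w}$$

For example, on a 1920 × 1080 plate with the pivot on the top border ($c_y = 1080$), this gives $(1080/540 - 1) \times 1080/1920 = 0.5625$, which is half the frame height expressed in width-normalized units.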

win_translate.x = -(Transform.translate.x/(Transform.width/2))/Transform.scale.w+(1-(1/Transform.scale.w))*(Transform.center.x/(Transform.width/2)-1)

win_translate.y = -(Transform.translate.y/(Transform.width/2))/Transform.scale.h+(1-(1/Transform.scale.h))*(Transform.center.y/(Transform.height/2)-1)*(Transform.height/Transform.width)

For the scale, the expression is much simpler: we just invert the Transform’s scale:
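To check that the full expressions really match the Transform for any pivot, here is a small plain-Python sketch; all names are hypothetical. `transform_to_window` transcribes the expressions above, `transform_point` applies what a Transform does to a pixel (scale about the pivot, then translate), and `window_point` applies an assumed model of the camera window, not taken from Nuke’s documentation: positions centered on the frame and normalized over half the width, shifted by Window Translate and divided by Window Scale. Agreement under that model is a useful cross-check of the trial-and-error result, not a statement about how Nuke’s camera is implemented internally.

```python
def transform_to_window(tx, ty, sw, sh, cx, cy, width, height):
    """Transcription of the win_translate / win_scale expressions above.

    All inputs are in pixels except the scales. Returns
    ((win_translate.x, win_translate.y), (win_scale.x, win_scale.y)).
    """
    half_w = width / 2.0
    half_h = height / 2.0
    win_tx = -(tx / half_w) / sw + (1 - 1 / sw) * (cx / half_w - 1)
    win_ty = (-(ty / half_w) / sh
              + (1 - 1 / sh) * (cy / half_h - 1) * (height / width))
    return (win_tx, win_ty), (1 / sw, 1 / sh)


def transform_point(x, y, tx, ty, sw, sh, cx, cy):
    """A 2D Transform on one pixel: scale about (cx, cy), then translate."""
    return cx + sw * (x - cx) + tx, cy + sh * (y - cy) + ty


def window_point(x, y, win_t, win_s, width, height):
    """ASSUMED window model: normalize over half the width around the
    frame center, apply u' = (u - win_translate) / win_scale, and
    convert back to pixels."""
    half_w = width / 2.0
    half_h = height / 2.0
    u = (x - half_w) / half_w
    v = (y - half_h) / half_w
    u2 = (u - win_t[0]) / win_s[0]
    v2 = (v - win_t[1]) / win_s[1]
    return u2 * half_w + half_w, v2 * half_w + half_h


# 1920x1080 plate, 1.2x zoom pivoting on the top-right corner,
# nudged 50 px left: both paths should land on the same pixel.
w, h = 1920, 1080
params = (-50.0, 0.0, 1.2, 1.2, 1920.0, 1080.0)
win_t, win_s = transform_to_window(*params, w, h)
print(transform_point(100.0, 200.0, *params))
print(window_point(100.0, 200.0, win_t, win_s, w, h))
```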

win_scale.x = 1/Transform.scale.w

win_scale.y = 1/Transform.scale.h

Note that we use the Transform’s width and height because of what looks like a bug in Nuke’s camera: its width and height variables don’t return the project’s width and height as you would expect, but 640 and 480, the first format available in the project settings.

Also, these variables, taken from the Transform, return either the format of the node’s input if it is connected, or the root values from the project settings otherwise. So if your plate’s format doesn’t match the project format, make sure the Transform is connected to the plate before exporting the camera.

I wrapped the whole thing in a small Python script called transform_to_cam. The user just has to select the Transform node and the camera, then run the script: the camera is duplicated and linked to the Transform. You can get a version of this script on my GitHub to use in your projects or improve where needed. The script doesn’t take rotation into account for now, as I haven’t needed it yet; this might come in a future update.

Once the camera is exported with a WriteGeo node (plug the camera into a Scene node first, as Nuke won’t let you connect the WriteGeo directly to the camera), the next step is in the hands of the 3D department. An image plane can be created in the 3D software with the original tracked camera; the conformed camera can then be imported and used to view the scene, framed just like in the edit.
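For readers who want to see roughly what such a tool involves, here is a minimal sketch. It is not the transform_to_cam script from GitHub; `window_expressions` and `link_camera_to_transform` are hypothetical names. It relies on standard Nuke Python calls (`nuke.selectedNodes`, `nuke.nodeCopy` / `nuke.nodePaste` with `"%clipboard%"`, and a knob’s `setExpression(expression, channel)`), and assumes camera classes start with `Camera`. The expression builder is plain Python; `nuke` is imported only inside the function that needs it, so it runs only in Nuke’s interpreter.

```python
def window_expressions(xf):
    """Build the camera window expressions for a Transform named `xf`."""
    tx = ("-({n}.translate.x/({n}.width/2))/{n}.scale.w"
          "+(1-(1/{n}.scale.w))*({n}.center.x/({n}.width/2)-1)").format(n=xf)
    ty = ("-({n}.translate.y/({n}.width/2))/{n}.scale.h"
          "+(1-(1/{n}.scale.h))*({n}.center.y/({n}.height/2)-1)"
          "*({n}.height/{n}.width)").format(n=xf)
    return {
        "win_translate": (tx, ty),
        "win_scale": ("1/{n}.scale.w".format(n=xf), "1/{n}.scale.h".format(n=xf)),
    }


def link_camera_to_transform():
    """Duplicate the selected camera and drive its window knobs from the
    selected Transform. Rotation is ignored, as in the article."""
    import nuke  # only available inside Nuke's Python interpreter

    selected = nuke.selectedNodes()
    xforms = [n for n in selected if n.Class() == "Transform"]
    cams = [n for n in selected if n.Class().startswith("Camera")]
    if len(xforms) != 1 or len(cams) != 1:
        nuke.message("Select exactly one Transform and one Camera.")
        return None
    xform, cam = xforms[0], cams[0]

    # Copy only the camera through the clipboard, so its animation is kept.
    for n in selected:
        n.setSelected(False)
    cam.setSelected(True)
    nuke.nodeCopy("%clipboard%")
    # Paste with nothing selected so Nuke doesn't insert the copy after the original.
    cam.setSelected(False)
    nuke.nodePaste("%clipboard%")
    new_cam = nuke.selectedNode()
    new_cam.setName(cam.name() + "_conformed")

    # Channel 0 is x, channel 1 is y on both window knobs.
    for knob, (expr_x, expr_y) in window_expressions(xform.name()).items():
        new_cam[knob].setExpression(expr_x, 0)
        new_cam[knob].setExpression(expr_y, 1)
    return new_cam
```

In Nuke’s Script Editor, select the Transform and the camera, then call `link_camera_to_transform()`.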